Classification of Data Structures

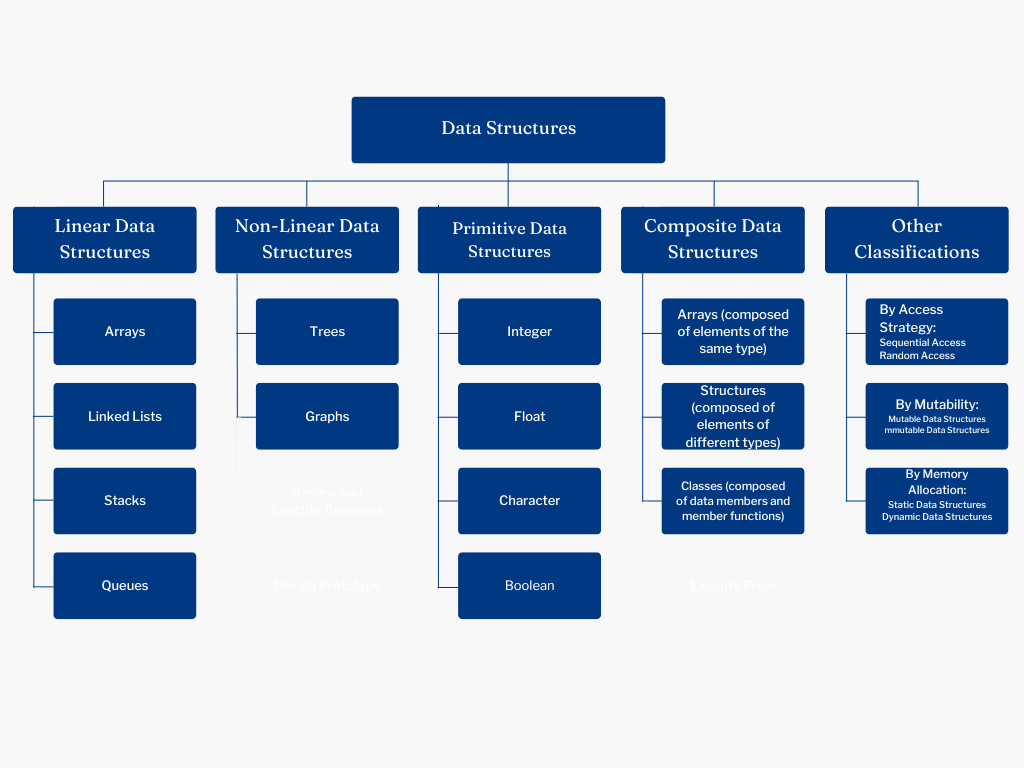

Data structures are classified by their organization, their behavior, and the relationships between their elements. This systematic categorization makes it easier to understand the characteristics, properties, and applications of each type. Common classifications include linear data structures, where elements are arranged sequentially, and non-linear data structures, where an element may have multiple predecessors or successors. Data structures can also be classified as primitive or composite, depending on whether they are fundamental data types or are built from them. Understanding the classification is essential for selecting the appropriate structure to solve specific problems and optimize algorithmic solutions.

1. Linear Data Structures

Linear data structures are data structures in which elements are arranged sequentially, with each element except the first and last having exactly one predecessor and one successor. These structures represent a linear sequence of elements and are characterized by their simplicity and ease of traversal.

Common examples of linear data structures include:

- Arrays: Arrays are a collection of elements stored at contiguous memory locations. Elements in an array are accessed using their index, and the order of elements is fixed.

- Linked Lists: Linked lists consist of nodes where each node contains a data element and a reference (or pointer) to the next node in the sequence. Linked lists can be singly linked (each node points to the next node) or doubly linked (each node points to both the next and previous nodes).

- Stacks: Stacks are a Last-In-First-Out (LIFO) data structure where elements are added and removed from the same end, called the top of the stack. Operations include push (add an element to the top) and pop (remove the top element).

- Queues: Queues are a First-In-First-Out (FIFO) data structure where elements are added at the rear (enqueue) and removed from the front (dequeue). This ensures that elements are processed in the order they were added.

Linear data structures are commonly used in various applications and algorithms due to their simplicity and predictable behavior. They are suitable for scenarios where data needs to be processed in a sequential manner or where the order of elements is significant. Many of them also support efficient insertion and deletion at their ends (for example, push and pop on a stack, or enqueue and dequeue on a queue), although inserting into the middle of an array requires shifting the elements that follow.
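As a minimal sketch of the LIFO and FIFO behavior described above, the following Python snippet uses a built-in list as a stack and `collections.deque` as a queue. The variable names are illustrative only, and other implementations (for example, a linked list) would behave the same way from the caller's point of view.

```python
from collections import deque

# Stack (LIFO): push and pop both happen at the same end, the top.
stack = []
stack.append("a")    # push
stack.append("b")
stack.append("c")
top = stack.pop()    # pop removes the most recently pushed item

# Queue (FIFO): enqueue at the rear, dequeue from the front.
queue = deque()
queue.append("a")    # enqueue
queue.append("b")
queue.append("c")
front = queue.popleft()  # dequeue removes the oldest item

print(top, front)  # prints: c a
```

A `deque` is used for the queue because removing from the front of a plain Python list shifts every remaining element, while `popleft` does not.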

2. Non-Linear Data Structures

Non-linear data structures are data structures in which elements are not arranged in a single sequence. Instead, an element may be connected to several others, which allows hierarchical relationships (as in trees) or arbitrary interconnections (as in graphs).

Common examples of non-linear data structures include:

- Trees: Trees are hierarchical data structures consisting of nodes connected by edges. Every node except the root (the topmost node) has exactly one parent, and a node may have zero or more children. Nodes with children are internal nodes, and nodes without children are leaf nodes. Trees are widely used for representing hierarchical data such as file systems, organizational charts, and XML/HTML documents.

- Graphs: Graphs consist of a set of vertices (nodes) and edges (connections) between them. Unlike trees, graphs allow for arbitrary connections between vertices, enabling the representation of complex relationships such as networks, social connections, and road maps. Graphs can be directed (edges have a direction) or undirected (edges have no direction) and may contain cycles (loops).

Non-linear data structures provide flexibility for representing diverse relationships and structures in various applications. They are suitable for scenarios where elements have complex interdependencies or where hierarchical relationships are not strictly defined. Additionally, non-linear data structures support advanced algorithms for traversal, searching, and pathfinding, making them essential for solving complex computational problems.
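The difference between a tree and a graph can be sketched in a few lines of Python. The representation below (nested `(value, children)` tuples for the tree, an adjacency-list dictionary for the graph) and the helper names `preorder` and `reachable` are assumptions chosen for illustration; many other representations are possible.

```python
# A tree as nested (value, children) pairs:
# every node except the root has exactly one parent.
tree = ("root", [
    ("docs", [("a.txt", []), ("b.txt", [])]),
    ("src", [("main.py", [])]),
])

def preorder(node):
    """Depth-first traversal: visit a node, then each of its subtrees."""
    value, children = node
    visited = [value]
    for child in children:
        visited.extend(preorder(child))
    return visited

# A directed graph as an adjacency list: any vertex may connect to any
# other, and cycles such as A -> B -> C -> A are allowed.
graph = {"A": ["B"], "B": ["C"], "C": ["A", "D"], "D": []}

def reachable(graph, start):
    """Collect every vertex reachable from start. The seen set keeps
    the cycle from causing an infinite loop."""
    seen = {start}
    pending = [start]
    while pending:
        vertex = pending.pop()
        for neighbor in graph[vertex]:
            if neighbor not in seen:
                seen.add(neighbor)
                pending.append(neighbor)
    return seen

print(preorder(tree))
print(sorted(reachable(graph, "A")))
```

The tree traversal needs no visited set because a tree has no cycles, whereas the graph traversal does.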

3. Primitive Data Structures

Primitive data structures, also known as elementary or basic data structures, are fundamental data types provided by programming languages to represent simple values. These data types are directly supported by the language and are used to store individual values rather than complex structures. A primitive value has no internal parts to modify; a variable that holds one is changed by assigning it a new value.

Common examples of primitive data structures include:

- Integer: Represents whole numbers without any decimal points (e.g., 1, -5, 100).

- Float (Floating-point Number): Represents real numbers with decimal points (e.g., 3.14, -0.5, 2.718).

- Character: Represents a single character from a character set (e.g., ‘a’, ‘B’, ‘$’).

- Boolean: Represents a logical value indicating true or false.

These primitive data types serve as the building blocks for more complex data structures and are used to represent basic values in programs. They are often manipulated directly by programming language operators and functions and are stored in memory according to the language’s data storage conventions. Primitive data structures are essential for performing basic arithmetic operations, comparisons, and logical operations in programming.
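The four types listed above map loosely onto Python's built-in scalar types, as the short sketch below shows. Note the assumption involved: Python has no dedicated character type, so a one-character string stands in for it here. The variable names are illustrative.

```python
count = 42         # integer
ratio = 3.14       # floating-point number
initial = "a"      # single character (a length-1 str in Python)
is_ready = True    # boolean

# Primitive values are manipulated directly by language operators.
total = count + 8            # arithmetic
is_large = total > 45        # comparison
accepted = is_ready and is_large  # logical operation

print(total, is_large, accepted)  # prints: 50 True True
```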

4. Composite Data Structures

Composite data structures, also known as non-primitive data structures, are data structures that are composed of multiple primitive or composite data types. Unlike primitive data structures, which represent simple values, composite data structures are used to organize and manage collections of data in a more complex manner. They allow for the creation of hierarchical or interconnected structures that can represent more sophisticated data relationships.

Common examples of composite data structures include:

- Arrays: An array is a collection of elements, each identified by an index or key, that stores values of the same data type in contiguous memory locations.

- Structures (Structs): Structures allow you to group together variables of different data types under a single name, enabling the creation of custom data types with multiple attributes.

- Classes: Classes are similar to structures but also include member functions (methods) that operate on the data stored within the class. They are a fundamental concept in object-oriented programming.

- Records: Records are similar to structures but are used in database systems to represent a single entity or record with multiple fields or attributes.

Composite data structures provide greater flexibility and expressiveness compared to primitive data structures, allowing programmers to model complex data relationships and build more sophisticated data processing systems. They are essential for representing real-world entities and organizing data in a structured and efficient manner. Additionally, composite data structures often form the basis for higher-level data structures and abstract data types, enabling the development of more advanced algorithms and data processing techniques.
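As one possible illustration, the Python sketch below groups several primitive fields into a class and then collects instances in a list, so a composite type is built from primitives and from other composites. The `Student` class, its fields, and the 3.5 threshold are hypothetical and exist only for this example.

```python
from dataclasses import dataclass

@dataclass
class Student:
    # Fields of different primitive types grouped under one name.
    name: str
    age: int
    gpa: float
    enrolled: bool

    def is_honors(self) -> bool:
        """A method operating on the data stored in the instance."""
        return self.enrolled and self.gpa >= 3.5

# A list (a composite) whose elements are themselves composites.
roster = [
    Student("Ada", 20, 3.9, True),
    Student("Lin", 22, 3.1, True),
]
honors = [student.name for student in roster if student.is_honors()]

print(honors)  # prints: ['Ada']
```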

5. Static vs Dynamic Data Structures

Static and dynamic data structures are two categories of data structures distinguished by their behavior in terms of memory allocation and size flexibility:

Static Data Structures

- Static data structures have a fixed size determined at compile time and cannot be resized during program execution.

- Memory allocation for static data structures is done at compile time, and the size remains constant throughout the program’s execution.

- Examples of static data structures include fixed-size arrays in languages like C and C++.

- Static data structures are efficient in terms of memory usage and access time but lack flexibility in accommodating changing data requirements.

Dynamic Data Structures

- Dynamic data structures can adjust their size dynamically during program execution to accommodate changing data requirements.

- Memory allocation for dynamic data structures is done at runtime, allowing them to grow or shrink as needed.

- Examples of dynamic data structures include linked lists, dynamic arrays (e.g., ArrayList in Java), stacks, queues, trees, and graphs.

- Dynamic data structures offer flexibility in managing data and can efficiently handle scenarios where the size of data is unknown or varies over time.

Comparison:

| Aspect | Static Data Structures | Dynamic Data Structures |
|---|---|---|
| Memory allocation | At compile time | At runtime |
| Size flexibility | Fixed size | Can grow or shrink during execution |
| Usage | Suited to data whose size is known in advance and remains constant | Preferred when the size of data varies or needs to be adjusted dynamically |

In summary, the choice between static and dynamic data structures depends on factors such as memory efficiency, access time requirements, and the flexibility needed to accommodate changing data sizes. Each type of data structure has its advantages and is suitable for specific use cases based on the requirements of the application.
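Python does not expose compile-time allocation, so the contrast can only be approximated. The sketch below uses a hypothetical `FixedArray` class (not a standard library type) whose capacity is set once and never changes, next to a built-in list that grows on demand.

```python
class FixedArray:
    """Hypothetical fixed-capacity container, for illustration only."""

    def __init__(self, capacity):
        self._slots = [None] * capacity  # storage reserved up front
        self._size = 0

    def append(self, value):
        if self._size == len(self._slots):
            raise OverflowError("FixedArray is full")
        self._slots[self._size] = value
        self._size += 1

    def __len__(self):
        return self._size

# Static behavior: the third append fails because capacity is fixed.
fixed = FixedArray(2)
fixed.append(10)
fixed.append(20)
try:
    fixed.append(30)
    overflowed = False
except OverflowError:
    overflowed = True

# Dynamic behavior: a list resizes itself as elements are added.
dynamic = []
for value in (10, 20, 30):
    dynamic.append(value)

print(overflowed, len(fixed), len(dynamic))  # prints: True 2 3
```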

6. Homogeneous vs Heterogeneous Data Structures

Homogeneous and heterogeneous data structures are classifications based on the uniformity or diversity of the elements they contain:

Homogeneous Data Structures

- In homogeneous data structures, all elements stored within the structure are of the same data type.

- These data structures are characterized by uniformity, where each element shares the same data type and structure.

- Examples of homogeneous data structures include arrays, where all elements are of the same data type (e.g., integer array, character array).

Heterogeneous Data Structures

- Heterogeneous data structures contain elements of different data types.

- These data structures allow for diversity, enabling storage of elements with varying data types and structures within the same container.

- Examples of heterogeneous data structures include structures (structs) and classes in programming languages like C++ and Java, where each instance can contain multiple attributes of different data types.

Comparison:

| Aspect | Homogeneous | Heterogeneous |
|---|---|---|
| Data type consistency | All elements share one data type | Elements may have different data types |
| Usage | Suited to uniform data, providing simplicity and efficiency in accessing elements | Preferred when elements of different data types or structures must be stored together, giving greater flexibility in representing complex data relationships |
| Examples | Arrays | Structures (structs) and classes |

In summary, the choice between homogeneous and heterogeneous data structures depends on the nature of the data being stored and the requirements of the application. Homogeneous data structures offer simplicity and efficiency for uniform data types, while heterogeneous data structures provide flexibility and versatility for handling diverse data types and structures within the same container.
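A short Python sketch can make the distinction concrete. It uses the standard `array` module, whose typed arrays accept only one element type, and a plain dictionary as a stand-in for a record with mixed field types. The data values are made up for the example.

```python
from array import array

# Homogeneous: array("i", ...) stores signed integers only.
scores = array("i", [90, 85, 77])
scores.append(100)
try:
    scores.append("high")   # wrong type for this array
    rejected = False
except TypeError:
    rejected = True

# Heterogeneous: one container holding values of different types.
record = {"name": "Ada", "age": 20, "gpa": 3.9}
field_types = {key: type(value).__name__ for key, value in record.items()}

print(rejected)     # prints: True
print(field_types)  # prints: {'name': 'str', 'age': 'int', 'gpa': 'float'}
```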

7. Linear vs Non-Linear Memory Representation

Linear and non-linear memory representation are two ways of organizing and accessing memory in data structures:

Linear Memory Representation

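- In linear memory representation, data elements form a single sequence in memory, either in a contiguous block (as in arrays) or as nodes chained together by pointers (as in linked lists).

- Elements are accessed in sequence, with each element except the first and last having one predecessor and one successor.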

- Common examples of data structures with linear memory representation include arrays and linked lists.

Non-Linear Memory Representation

- In non-linear memory representation, data elements are stored in memory in a non-sequential or hierarchical manner.

- Elements may have multiple predecessors and successors, and their arrangement does not follow a linear sequence.

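- Examples of data structures with non-linear memory representation include trees and graphs. Multi-dimensional arrays are sometimes listed here because their elements are addressed by more than one index, although many languages store them in a single contiguous block.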

Comparison:

| Aspect | Linear | Non-Linear |
|---|---|---|
| Memory organization | Data elements are organized in a sequential manner | Allows more complex arrangements, such as hierarchical or interconnected structures |
| Access pattern | Typically sequential traversal | May require specialized traversal algorithms, such as depth-first or breadth-first search |
| Examples | Arrays and linked lists | Trees and graphs |

In summary, the choice between linear and non-linear memory representation depends on the structure and relationships of the data being stored. Linear memory representation is suitable for scenarios where data elements have a linear sequence and are accessed sequentially, while non-linear memory representation is preferred for representing more complex relationships and hierarchical structures. Each type of memory representation offers unique advantages and is selected based on the requirements of the application and the characteristics of the data being processed.
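One way to see how a logically two-dimensional structure can still live in linear memory is the row-major mapping used by languages such as C for two-dimensional arrays. The sketch below assumes row-major order and uses a flat Python list to stand in for a contiguous memory block; the names `offset` and `get` are illustrative.

```python
ROWS, COLS = 2, 3

# The 2 x 3 grid
#   1 2 3
#   4 5 6
# stored as one flat sequence, row after row.
flat = [1, 2, 3,
        4, 5, 6]

def offset(row, col, cols=COLS):
    """Row-major mapping from a (row, col) pair to a flat position."""
    return row * cols + col

def get(row, col):
    """Read the element at (row, col) from the flat storage."""
    return flat[offset(row, col)]

print(offset(1, 0), get(1, 2))  # prints: 3 6
```

Because the position is computed arithmetically, no traversal is needed to reach an element, which is the main practical benefit of contiguous storage.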

8. Primitive Operations vs Complex Operations

Primitive operations and complex operations are two categories of operations performed on data structures, distinguished by their simplicity or complexity:

Primitive Operations

- Primitive operations are basic operations directly supported by the data structure or underlying programming language.

- These operations typically involve simple tasks such as accessing, inserting, deleting, or updating individual elements within the data structure.

- Examples of primitive operations include:

  - Accessing an element at a specific index in an array.

  - Inserting a new element at the end of a list.

  - Deleting an element from a set.

- Primitive operations are often the building blocks for more complex operations and algorithms.

Complex Operations

- Complex operations involve a combination of primitive operations or higher-level processing to achieve a specific task or functionality.

- These operations may require multiple steps, iterations, or conditional logic to perform complex data manipulations or transformations.

- Examples of complex operations include:

  - Searching for an element with a specific value in a binary search tree (which involves traversing the tree and comparing values).

  - Sorting elements in an array using a sorting algorithm such as quicksort or mergesort.

  - Performing a graph traversal algorithm to find the shortest path between two nodes.

- Complex operations often have higher time complexity and may require more computational resources compared to primitive operations.

Comparison:

| Aspect | Primitive Operations | Complex Operations |
|---|---|---|
| Complexity | Simple, involving basic tasks | More intricate, involving multiple steps or processing |
| Functionality | Perform fundamental tasks such as accessing or modifying individual elements | Implement higher-level functionalities or algorithms |
| Efficiency | Typically more efficient in terms of time and space complexity | May require additional computational resources |
| Usage | Serve as building blocks for implementing complex algorithms and operations | Applied to solve more advanced problems or tasks |

In summary, primitive operations are basic tasks directly supported by data structures, while complex operations involve higher-level processing or algorithms to achieve specific functionalities. Understanding the distinction between primitive and complex operations is essential for analyzing the efficiency and performance of algorithms and data structure operations.

- Primitive Operations: Basic operations directly supported by the data structure.

  - Example: Insertion and deletion in arrays.

- Complex Operations: Higher-level operations built upon primitive operations.

  - Example: Sorting and searching in arrays.
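The relationship between the two kinds of operation can be sketched in Python. In the example below, appending and indexing are single primitive steps, while binary search is a complex operation assembled from repeated index accesses and comparisons. The `binary_search` function is written out here for illustration and assumes its input list is already sorted in ascending order.

```python
def binary_search(items, target):
    """Return the index of target in sorted items, or -1 if absent.
    Built entirely from primitive index accesses and comparisons."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            low = mid + 1    # target can only be in the upper half
        else:
            high = mid - 1   # target can only be in the lower half
    return -1

values = [7, 3, 9, 1]
values.append(5)      # primitive: insert at the end
first = values[0]     # primitive: access by index
values.sort()         # complex: sorting, values is now [1, 3, 5, 7, 9]
position = binary_search(values, 7)  # complex: searching

print(first, position)  # prints: 7 3
```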

Conclusions

Understanding the classification helps in selecting the appropriate structure for solving specific problems, optimizing algorithmic solutions, and designing efficient software systems. Each type of data structure has its own characteristics, advantages, and optimal use cases, making it important to choose wisely based on the requirements of the problem at hand.

FAQs

What is a data structure?

Data structures are fundamental components of computer science that organize and store data in a way that enables efficient access, manipulation, and retrieval. They provide a framework for representing and managing information within computer programs, facilitating tasks such as searching, sorting, and processing data. In essence, data structures serve as the building blocks upon which algorithms operate, playing a critical role in problem-solving and software development.

Why Study Data Structures?

Studying data structures is crucial for developing efficient algorithms, optimizing program performance, and solving complex computational problems. Understanding data structures enables programmers to organize and manage data effectively, facilitating tasks such as searching, sorting, and processing data. Mastery of data structures is essential for designing robust software systems, improving code readability, and enhancing problem-solving skills. By studying data structures, programmers gain insights into algorithmic paradigms, performance analysis, and memory management techniques, empowering them to develop scalable and maintainable software solutions. Overall, data structures form the foundation of computer science and are indispensable for mastering the art of programming.